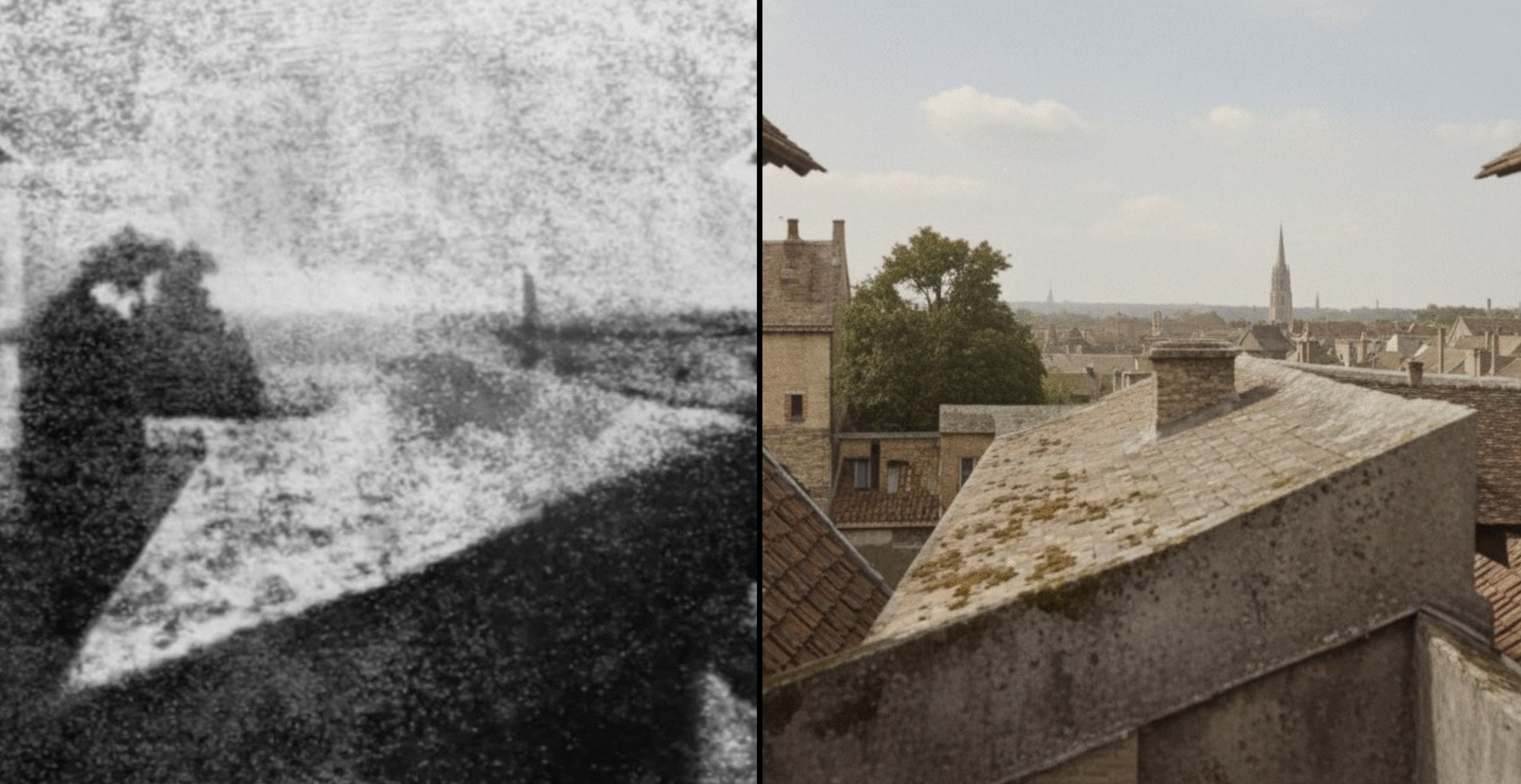

‘View from the Window at Le Gras’ is the world’s earliest surviving photograph, taken by Joseph Nicéphore Niépce in 1827.

His process was painstaking. He polished a pewter plate, coated it with a light-sensitive solution of bitumen dissolved in lavender oil, and placed it in a camera obscura overlooking his estate at Le Gras. After several days of exposure, sunlight hardened parts of the coating while shadows stayed soft. He then washed the plate with lavender oil and petroleum to dissolve the unhardened bitumen, before fixing and drying it to preserve the result, leaving behind a faint but permanent image.

The first ever photograph in human history:

Then, 198 years later, a Reddit user downloaded a digital copy of this image, pasted it into ChatGPT, asked it to ‘restore and colorize’, and then posted it side by side with the original. The caption read: ‘pretty cool attention to detail’. It took all of 10 minutes.

It reached the front page of /r/ChatGPT and received nearly 6,000 upvotes. The comments were effusive in their praise, and some users even posted their own versions, describing how they had tweaked the process to get a ‘better result’: “I prompted it to check historical data to compensate the lack of information in the original image. Layout is better but most improvements are on the color and material.”

But one comment from Significant_Poem_751 stood out to me (emphasis mine):

“totally inaccurate rendering -- and that is not surprising. i teach history of photography, so i know this photograph very well…the big triangle shape in the center is not another building or rooftop or any object…so don't get so excited about the "colorization" of this iconic image that literally changed the world.

Anyone who actually wants to know about this can easily look it up, but please use valid sources and not AI -- when i ask AI for info on the history of photography, it's 50/50 accurate/false…Just please don't make garbage out of something real, and significant. not everything needs to be used as a parlor game.”

Significant_Poem_751 is clearly livid. But it’s a righteous anger, because he is watching his passion and profession being trampled on in real time. A trip through his Reddit history reveals he’s not anti-AI either; he’s often in the ChatGPT subreddit and is quite bullish about the overall concept. But the breezy arrogance of the original post is clearly an affront to him.

Because, as he alludes to, we don’t need this reproduction even if it were accurate. We already have a pretty good idea of what a colorized and restored version of this photograph would look like, and we didn’t need generative AI to get there.

In July 1999, researchers from Spéos International Photography School in Paris, led by Jean-Louis Marignier, went to work in Niépce’s original house to restore the first ever point de vue.

They excavated his workshop, analysing the plaster and wood to discover modifications over time. They found images burnt onto the floor, literal fossils of his life’s work. Eventually, through carbon dating, they found the original spot in the original room where this heliograph was taken. From there, they used land registry records to work out which buildings, now long gone, would have been visible at the time. Then, they recreated the image in a computer programme, now old enough to be in a museum itself, and flipped and overlaid the photograph to recreate the exact view.

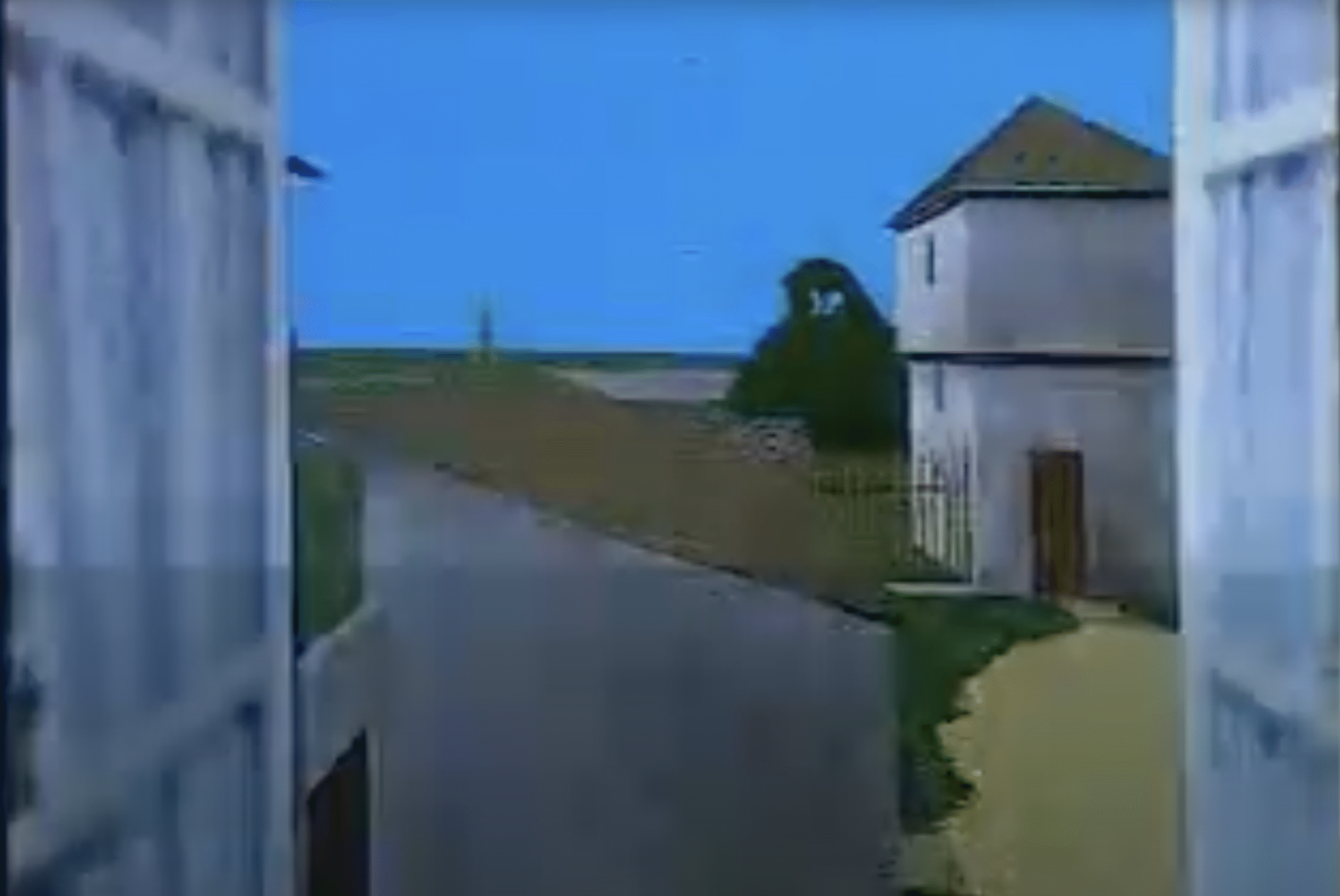

The entire process took the better part of a year, but they had their answer:

It was the collected expertise of hundreds of years of study, leveraging cutting-edge techniques, all bound by love of the historical provenance that shows the birth of a medium. It was a fantastic bit of practical history and academic research: thorough, proper work which ensured that the house, now the Musée Maison Nicéphore Niépce, can be treated as a pilgrimage spot for photographers the world over.

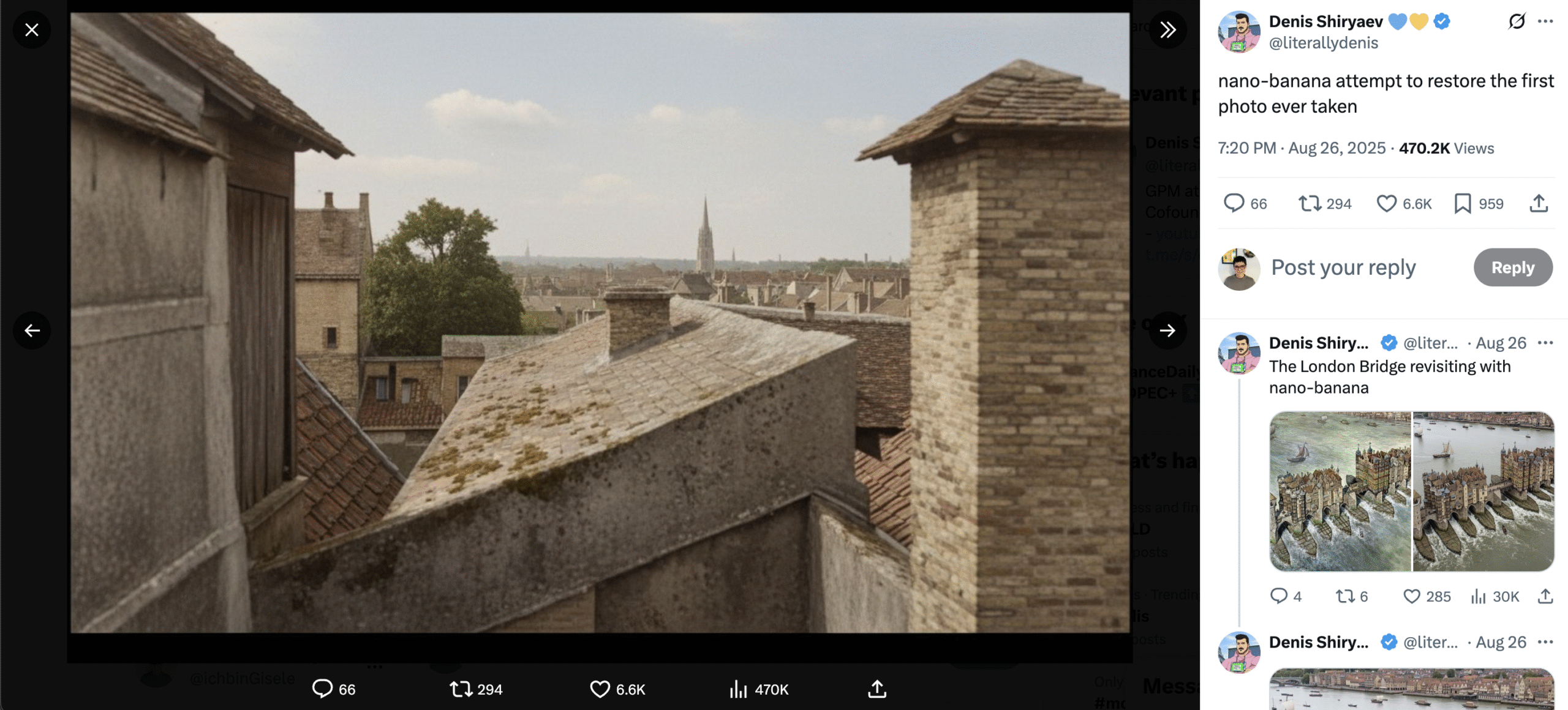

Now, compare this recreation to a more recent AI viral moment, this time using Google’s Nano Banana:

It is even more wrong than the previous attempt. Its constructions defy physics. It relocated the building to a city, turned a tree into a cathedral and the window frames into chimneys.

It also got over 470,000 views in less than two weeks. The YouTube documentary that details the painstaking work of multiple organizations has gotten only 30,000 views in 18 years. That is partly because the process was slow and buried in the middle of a 10-minute, 240p video. After all, only the true Niépce-heads want to watch someone excavate a 19th-century floorboard. Unlike Gemini, the researchers spent months simulating the courtyard and valued accuracy over speed.

I don’t blame the tool. It’s doing its best. The fact that the technology can even spit something back to you that is as realistic as this is objectively incredible. That you can then ask it to do the same thing in cyberpunk, kawaii, or overlay a Star Destroyer would melt Niépce’s brain. This power at the end of a prompt is why these posts do so well.

But crucially, the reaction here is tied to the assumption that it is doing a good job of restoring the original image. People are impressed by how it has ‘improved’ the first photograph ever taken. They’ve assumed it has done a better job than any expert up until now, which misses the point.

The 1827 original has allure precisely because of how groundbreaking it was. It’s a 200-year-old mystery that enthusiasts and experts have dedicated their lives to understanding and reconstructing. What the shadows obscure is interesting because it takes so much work to reach into the past and illuminate it.

Flattening the world, one prompt at a time

The disconnect between truth and quality is one of the fundamental problems with AI-worship.

Ask the tools about something you are an expert in yourself, or even something you have a passing interest in as a hobby, and you soon find that they’re a lot shallower than they seem.

There are people who will say that this doesn’t matter. That it will only get better. That the details are unimportant and the sheer spectacle is what deserves its praise. But this is the thin end of a wedge of disinformation and erasure of expertise.

I know this first-hand. At various points in the last few years I tested LLMs in a variety of ways, including using them for design and development shortcuts on creative campaigns. Though initially impressive, they weren’t good enough at realising what we do to actually add value.

Because, crucially, I knew what the bar should be. I knew the quality that my clients and journalists would expect. And even though it might have been possible to put that work out, it wasn’t at a level that should be out there.

But not everyone’s bar is the same.

Maybe actual photography experts had already tried to use AI to recreate the original photograph, and, seeing how far short it fell, left it in the drafts. But the people who don’t care about the work, who just want more slop for the trough, didn’t know enough not to share it. Nor did the hundreds of thousands of people who subsequently saw it.

Each time that happens, the bar lowers further. It erases expertise, prioritises shortcuts and devalues craft and passion.

As the saying goes, ‘A lie gets halfway around the world while the truth is putting on its shoes.’ Today, AI gets halfway around the world in half the time, flattening the very things that made these moments magical in the first place.