At NeoMam, we take great pride in the work of our team.

Since 2011, we’ve launched more than 3,000 creative campaigns, many of which have crossed the line between online and offline: our work is currently touring the world as part of an exhibit from the V&A museum, and you can buy our creations as prints at the Frank Lloyd Wright Foundation or online as collectible silver coins.

However, as a digital-first business, the fruits of our labour mostly live and die on the web. Yet, in 2025, the way we work could make some people label us “Luddites.”

We have sat idly by these past couple of years while marketing influencers and business leaders boasted about using AI to automate every single task our team performs.

It has gotten to the point where there are now “agencies” selling 100% AI packages designed for other agencies to offload the very same services clients pay them to complete.

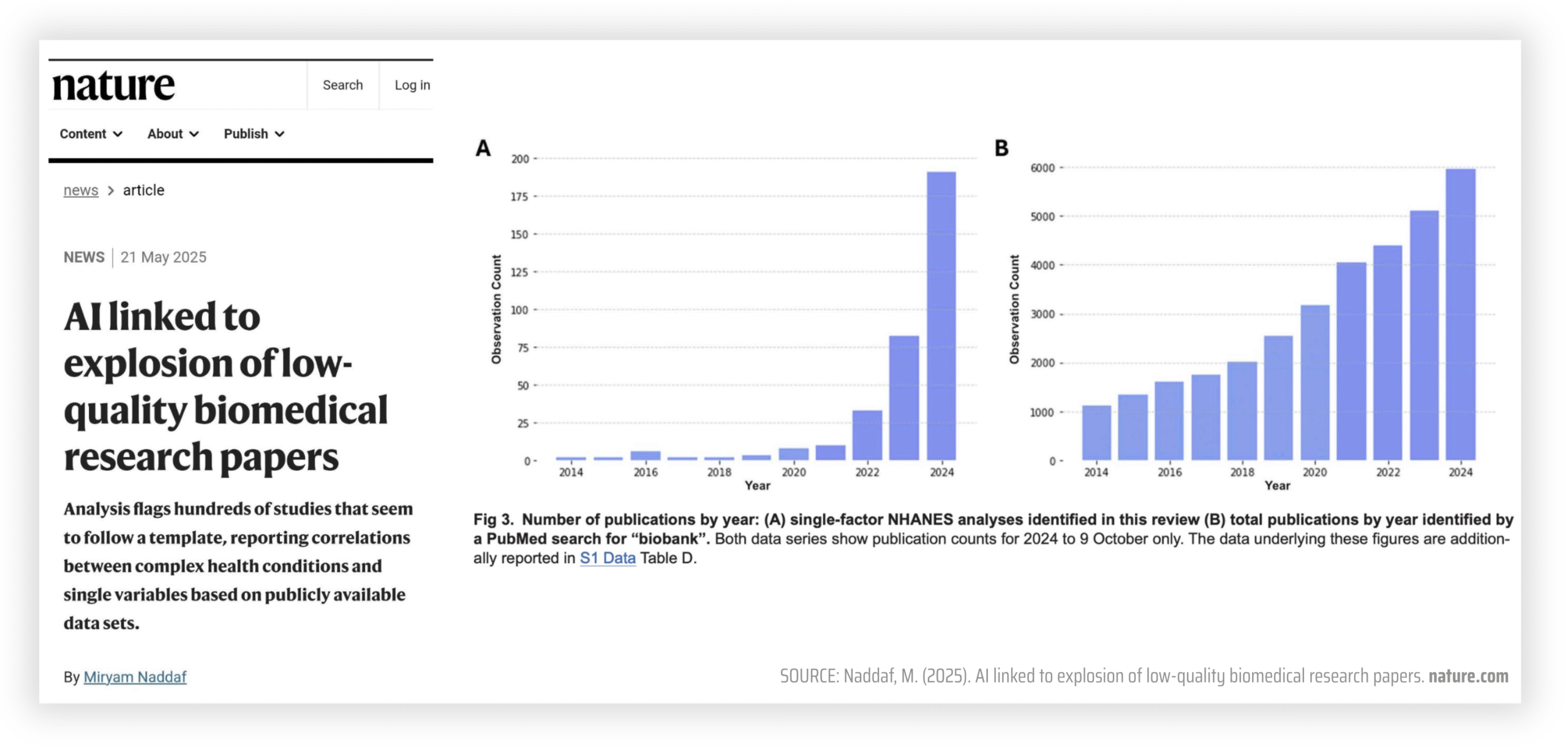

The rampant use of generative AI tools to automate our jobs has driven prices into the ground and is flooding the web with subpar content that nobody cared enough to think about, research, fact-check, write, design, develop, or promote.

And it’s no surprise, considering that every platform and tool we use seems to be pushing AI features on us nonstop. Heck, the UK government has even reportedly discussed a £2bn deal with OpenAI to give the entire country access to ChatGPT Plus: access none of us asked for.

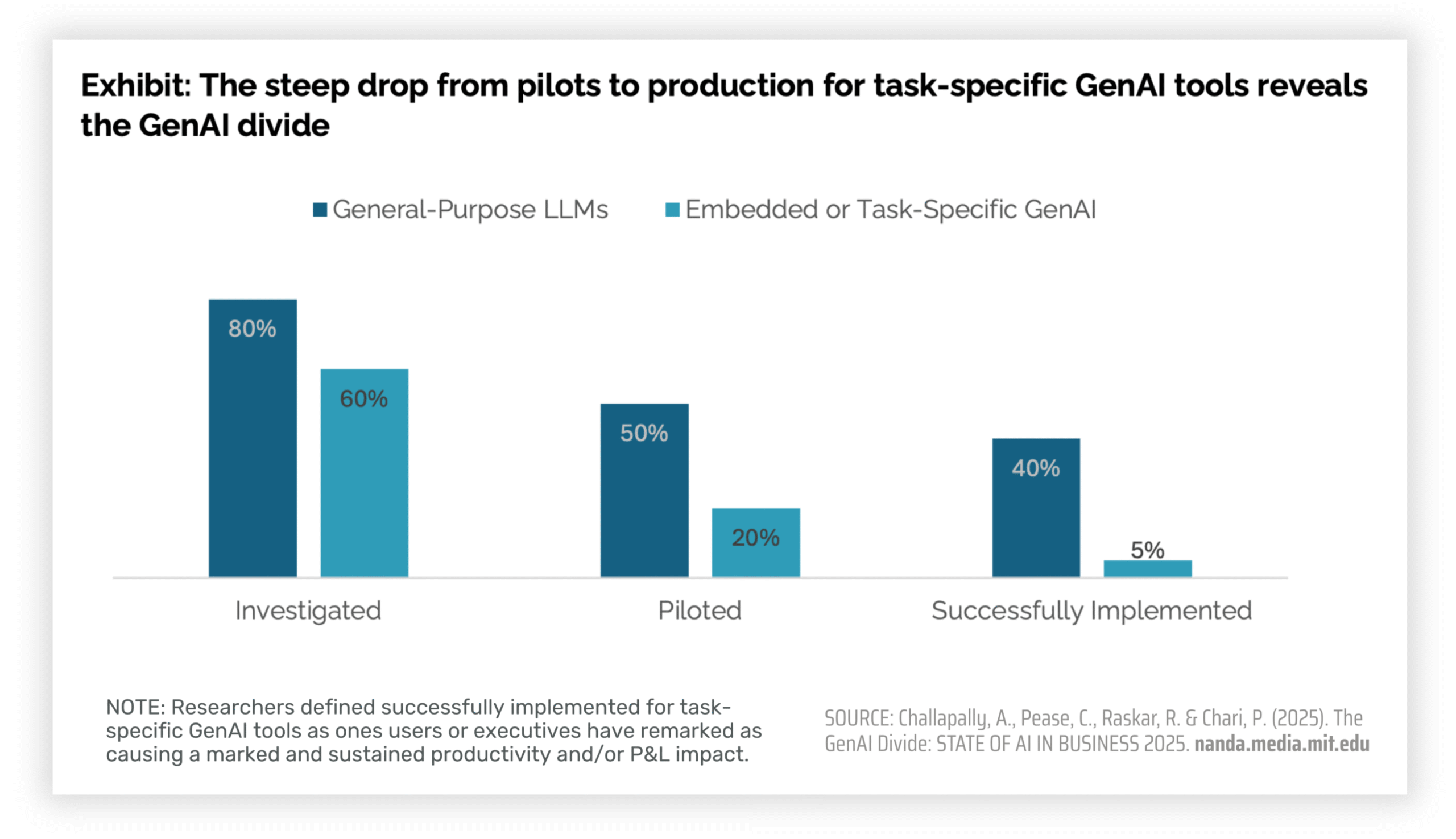

Where does this leave a small business that refuses to become part of the 95% of companies that waste valuable time, money and energy on AI integration only to see zero benefit?

In this article, we share the very real reasons why we bet on human intelligence despite the generative AI hype.

Reason #1: AI is not good for our people

AI-assisted work has been shown to weaken critical thinking and lead to dependency.

The findings on the impact AI tools have on users’ critical thinking keep piling up:

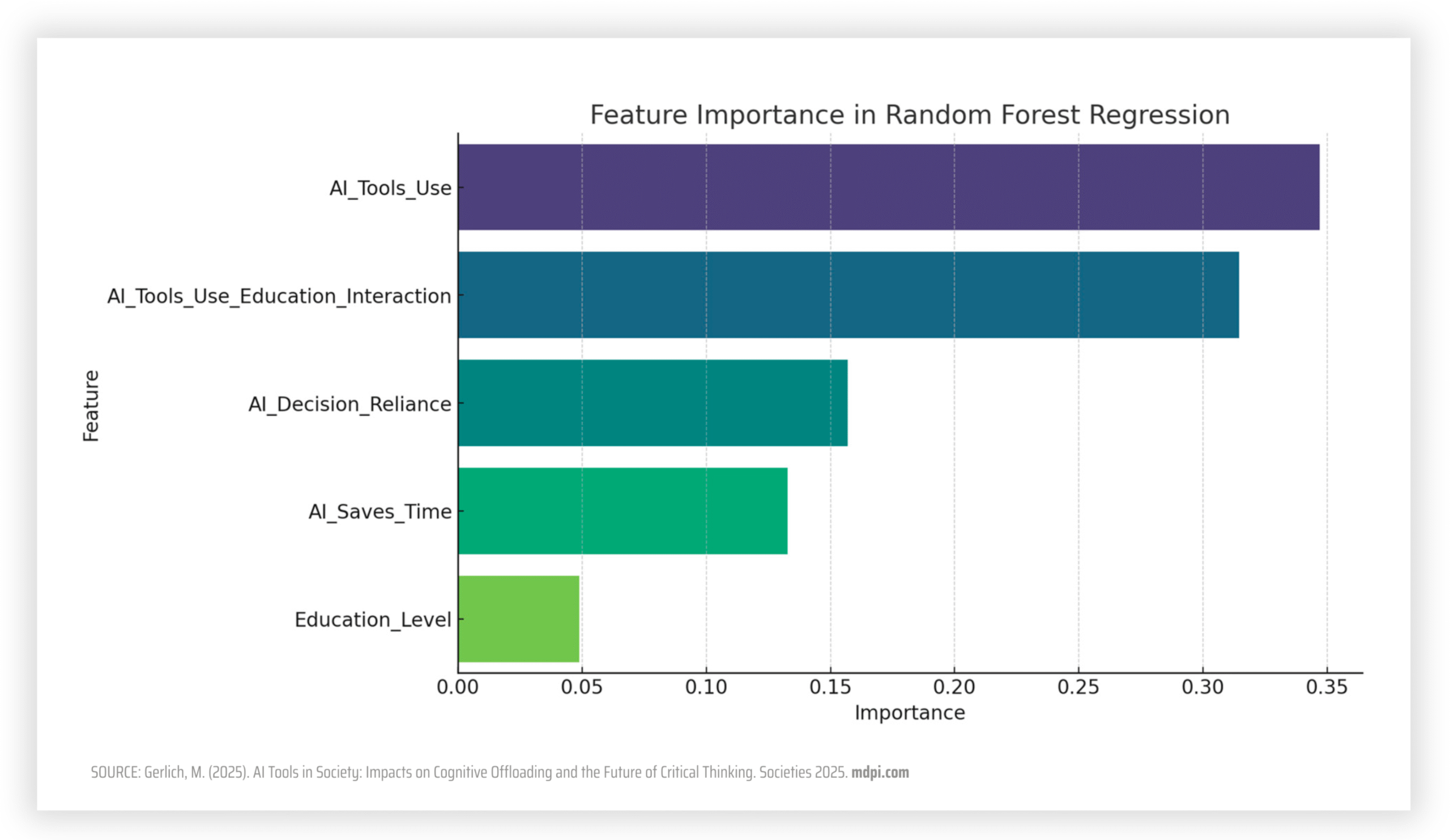

- A January 2025 study published in the journal Societies revealed a significant negative correlation between frequent AI tool usage and critical thinking abilities, mediated by increased cognitive offloading.

- An April 2025 study from Microsoft found that overreliance on AI tools could hinder critical thinking and diminish problem-solving skills.

- A June 2025 study from MIT showed that participants using LLM chatbots to write essays engaged their brains less and consistently got lazier over time.

But it’s not just our ability to engage our brains to think critically about a topic that is at stake, as multiple studies show that the use of AI tools also leads to dependency:

- The same April 2025 Microsoft study showed that using generative AI tools can potentially lead to long-term overreliance on the tool and diminished skills in the workplace.

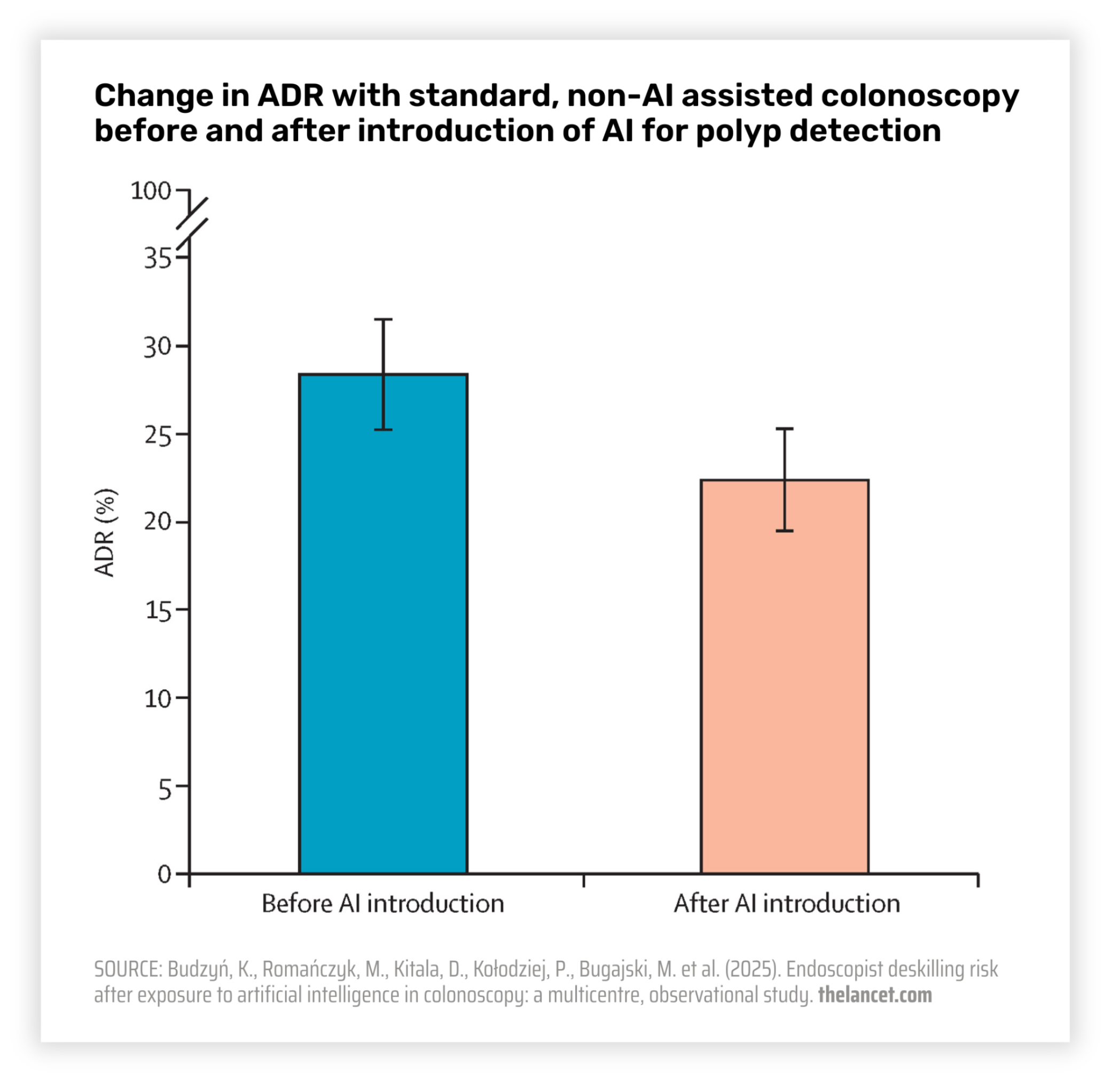

- An August 2025 study published in The Lancet found that within six months, endoscopists with years of experience in detecting cancer became over-reliant on AI recommendations, and their ability to detect tumors on their own decreased.

But there’s more to it, as our team’s creative output could also be at risk.

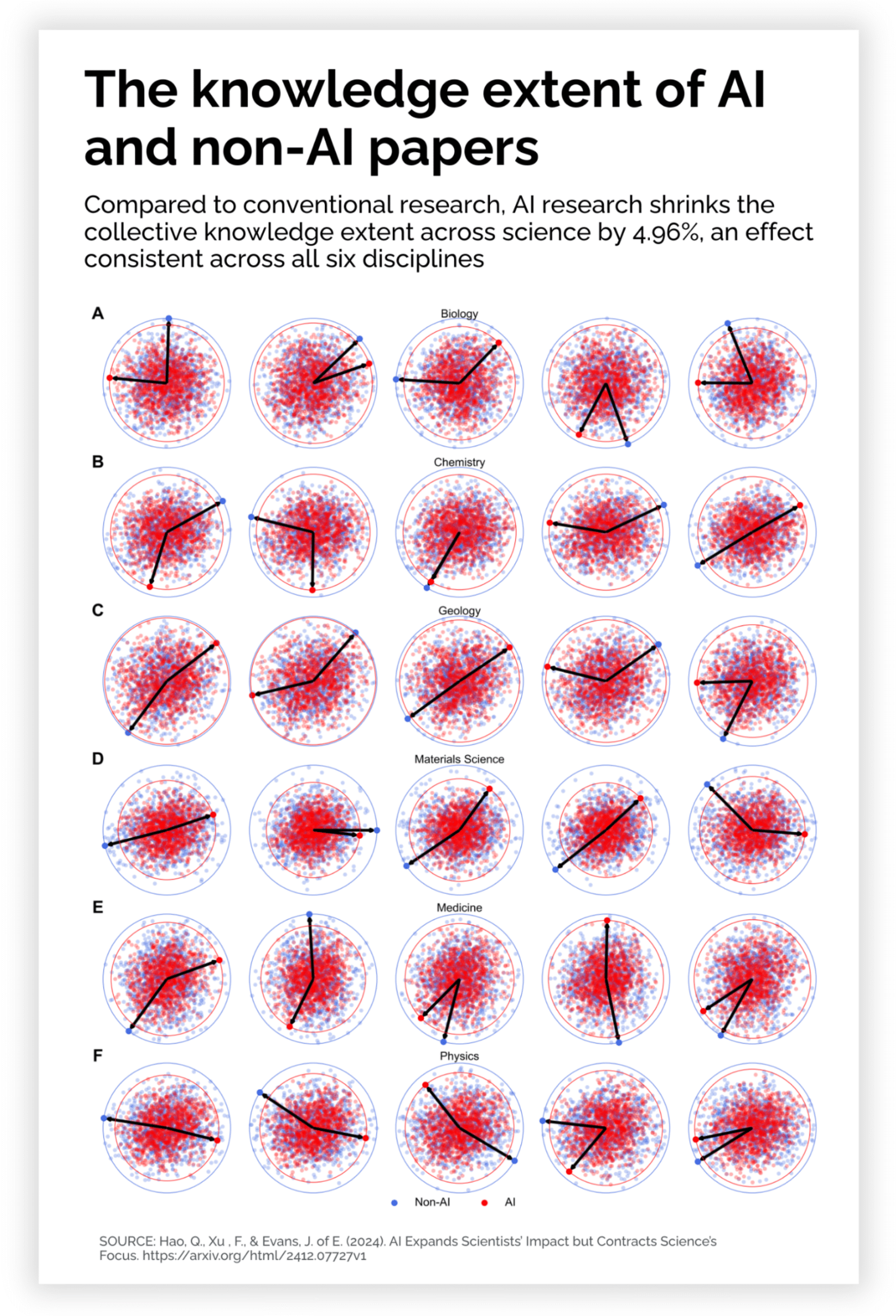

A study published by researchers at the University of Chicago and Tsinghua University showed that scientists using AI are less creative, more repetitive in the topics they approach and less likely to explore answers beyond existing datasets.

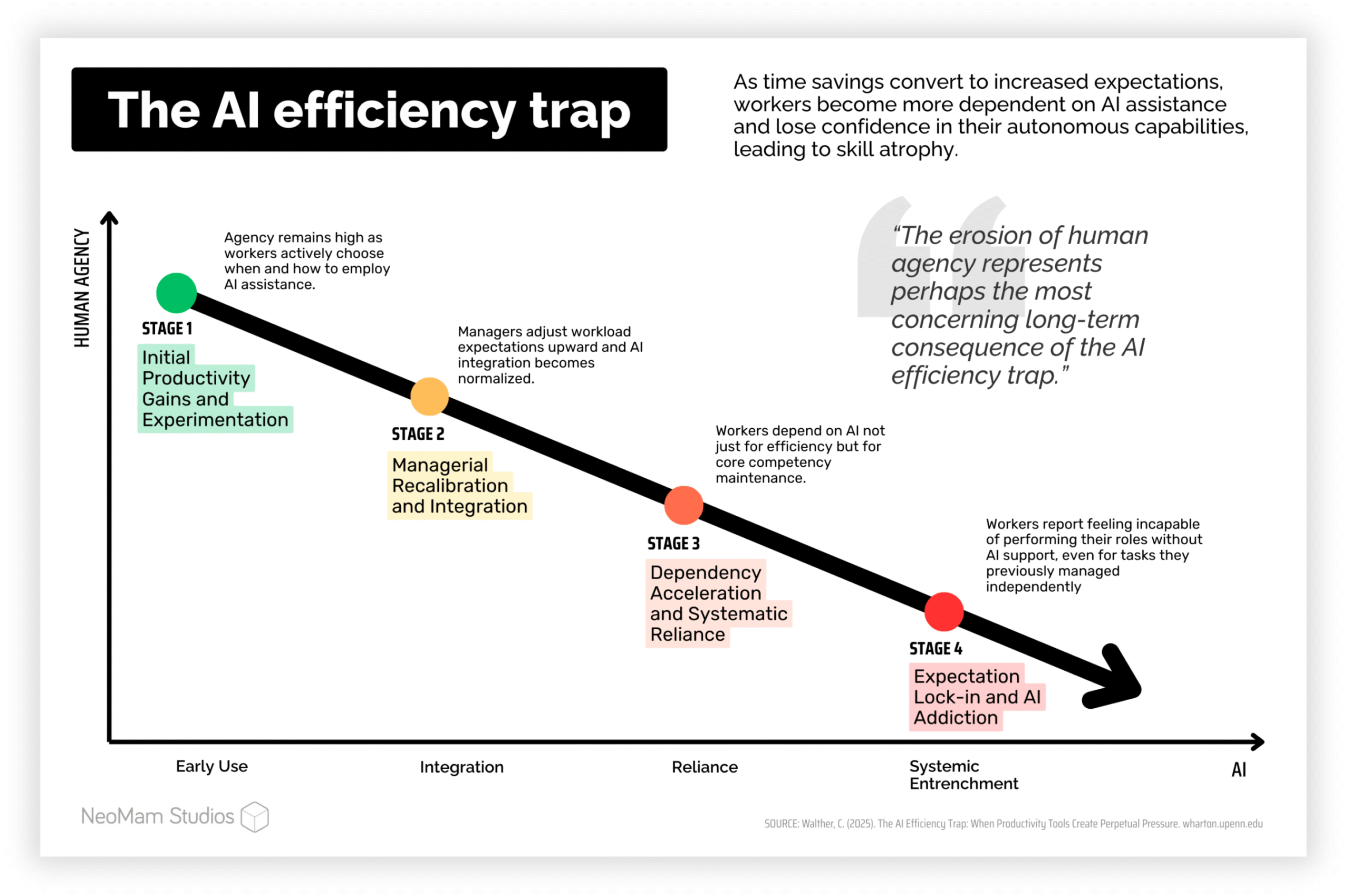

As if these statistics weren’t discouraging enough, Wharton scholar Cornelia Walther explains that workers using AI risk being caught in an efficiency trap: performance expectations keep rising to levels only achievable with AI assistance, while the workers’ human agency decays and they lose confidence in their autonomous capabilities.

The de-skilling and psychological damage of this AI efficiency treadmill is not worth it for us.

Especially considering that the success of our work hangs on our ability to feel inspired, ask original questions, find new sources of information, build fresh datasets, improve upon our past campaigns, and learn from what went wrong. Introducing AI into the mix could harm all that, and more.

Reason #2: AI is not good for our clients

The output generated by AI chatbots is not only wrong half of the time but also poses privacy and security risks.

Clients come to us to help them produce industry-leading reports, creative campaigns and original datasets. They do this to drive thought-leadership, press coverage, and organic links users will actually click on. In this context, we cannot risk introducing chatbot output riddled with errors.

And it’s not just us saying this.

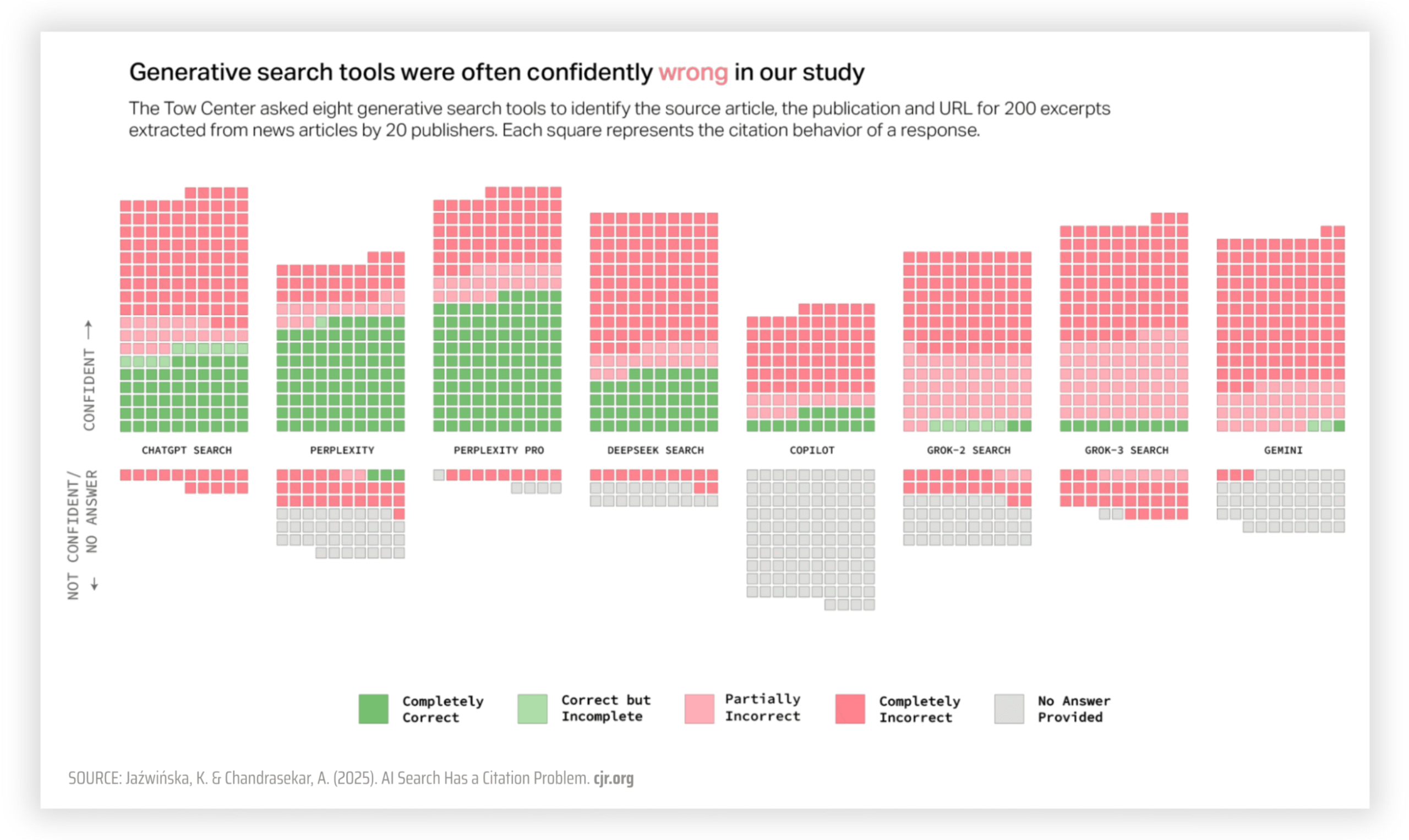

In a recent study, Columbia Journalism Review found that AI chatbots collectively provided incorrect answers (“hallucinations”) to more than 60% of queries when asked to cite and source specific excerpts from articles published across various news publishers. This included citing fabricated URLs for publishers that actually had content licensing deals in place with the AI companies.

These grim statistics are also coming straight from the horse’s mouth.

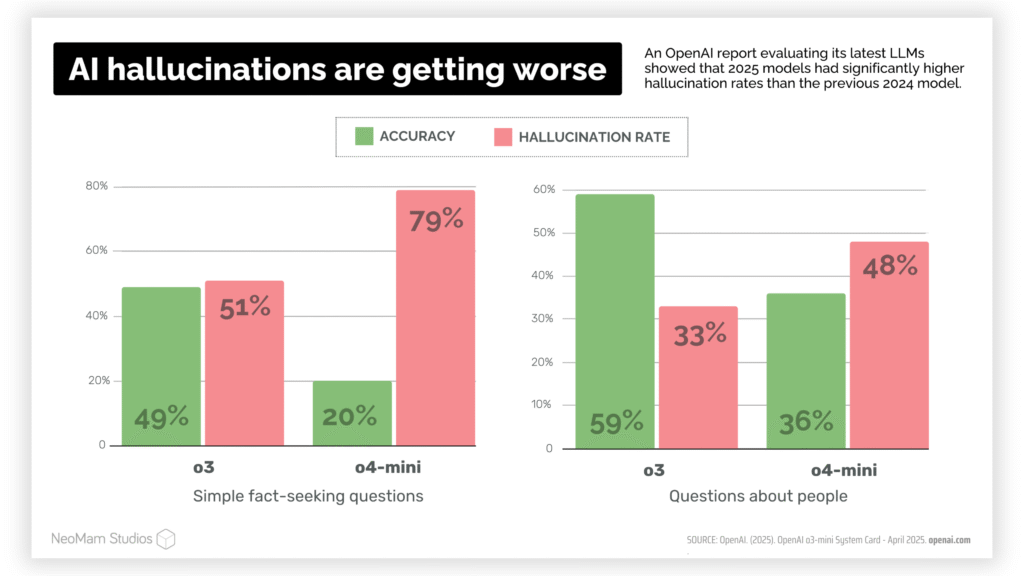

OpenAI has recently reported hallucination rates reaching 33% for its o3 model and 48% for its o4-mini model when answering questions about people that can be answered with publicly available facts.

“A.I. is getting more powerful, but its hallucinations are getting worse. A new wave of ‘reasoning’ systems from companies like OpenAI is producing incorrect information more often. Even the companies don’t know why.”

Cade Metz and Karen Weise

The New York Times

Knowing all this, we refuse to rely on AI tools for conducting research or providing factual information.

As an IPSO-regulated organisation, we pride ourselves on our journalistic standards and data accuracy. A researcher or data analyst (human or bot) who produces inaccurate information 50% of the time has no place on our team.

But it’s not just about accuracy.

Our clients include publicly traded companies, so our work goes through a rigorous review process undertaken by client legal teams: our methodologies must hold water, our research must be robust, and our findings must be reliable.

Considering the amount of time we spend keeping our clients (and their reputations) safe, it wouldn’t be wise to introduce tools that MIT refers to as “a security disaster.”

And this is no exaggeration:

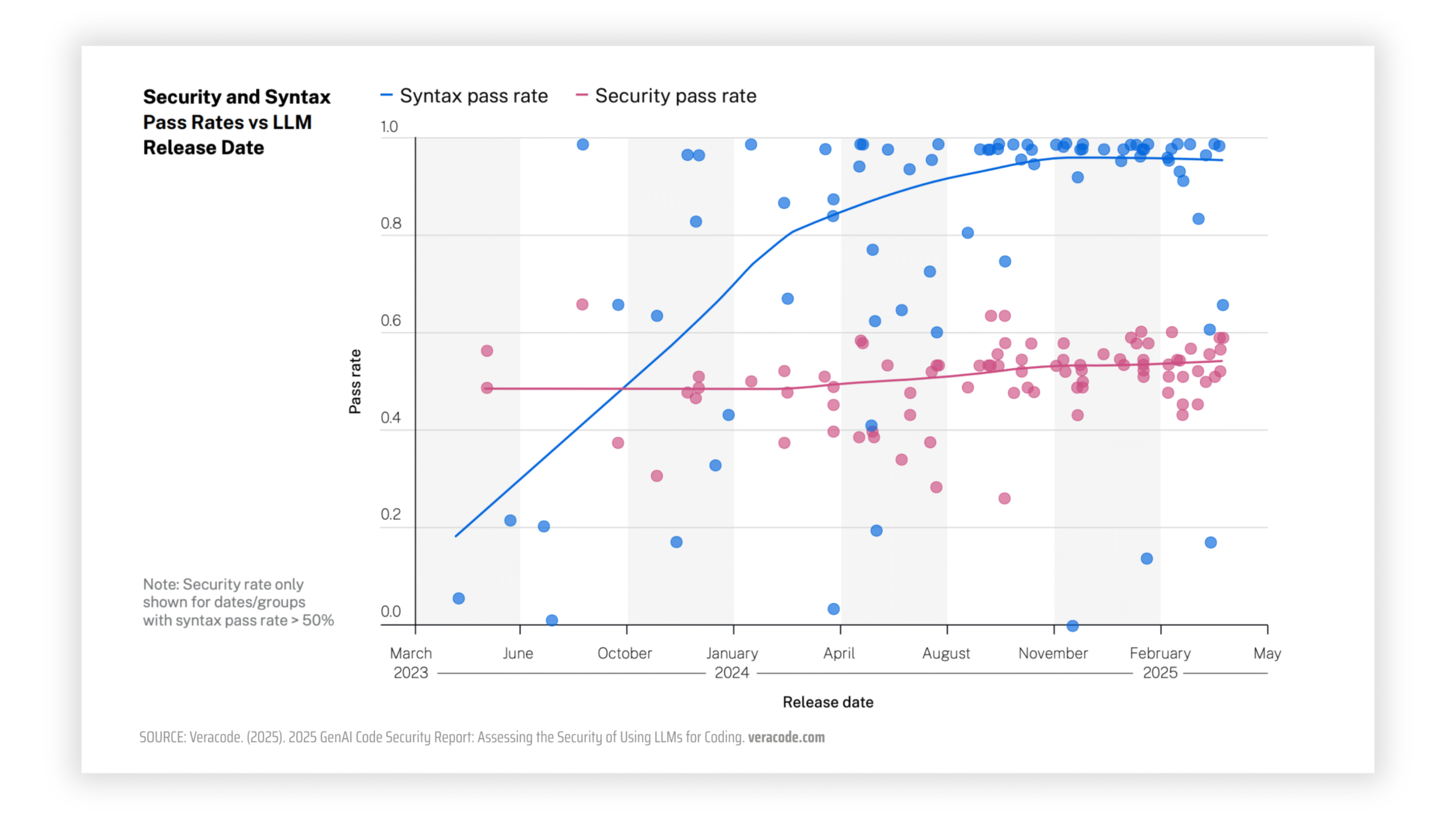

- An August 2025 report from Veracode showed that 45% of AI-generated code contains security flaws despite appearing production-ready.

- This aligns with an August 2025 survey by Checkmarx, which found that 98% of respondents had experienced a breach stemming from vulnerable code in the past year.

Avoiding popular vibe coding tools like Cursor ensures hackers won’t be able to inject malicious code into our client projects through them.

Plus, staying away from consumer AI chatbots also guarantees nobody on our team will inadvertently break an NDA by disclosing confidential data to third parties that could end up being indexed by Google Search for the world to see.

Reason #3: AI is not good for the world

The most hyped AI abilities wouldn’t be possible without the vast amounts of electricity, water and stolen human artwork consumed by the machines.

It’s widely accepted that popular AI models have been trained on stolen work produced by countless writers, journalists, concept artists, filmmakers, musicians, researchers, designers, bloggers, illustrators and other web content creators.

In fact, several lawsuits have been filed against OpenAI, Meta, Anthropic, Perplexity AI, Google, Microsoft, Midjourney, and Stability AI, alleging unauthorized use of copyrighted content to train their generative AI models.

What’s more, we are fairly confident that NeoMam works have been used to train some of these tools. Meta reached out to us saying it wanted to license our content, but the conversation eventually went cold without any explanation.

“Our stolen work has been what has made a word-prediction algorithm appear human, appear brilliant and full of talent and ideas. AI is nothing without our stolen art.”

Ewan Morrison

We are not useless

Just as we wouldn’t steal copyrighted images, we don’t feel comfortable incorporating chatbot output into client work. That is an ethical concern of ours, and it’s not the only one.

Back in February 2025, we visualised the energy consumption of ChatGPT prompts based on the reported daily users at the time (57.14 million), and the figures shocked us.

We learned that ChatGPT prompts consume enough electricity in one day to power the Empire State Building for a year and a half. When we calculated energy consumption over a year, we found that ChatGPT consumes more electricity annually than 117 countries do, just to answer user prompts.

Not only that.

Assuming each user prompts the chatbot five times per day, ChatGPT would consume as much water daily as the entire population of Taiwan flushing their toilets at once. Extrapolated over a month, ChatGPT would consume enough water to give everyone on the planet two full glasses.

One could argue that everything will harm the environment if you look deep enough, but we find it hard to justify prompting a generative AI tool when we know the cost is this high.

Reason #4: AI is not good for the web

As Google said, “the open web is in rapid decline,” so why make it worse?

The AI industry not only trains its models through copyright infringement but also relentlessly crawls websites at the expense of their ability to stay online.

These crawlers are so hungry for human works that they are taking down the websites of museums and libraries, and even threatening Wikipedia’s server stability. And it’s not just scraping bots that are overwhelming web servers: a recent report showed that one AI fetcher bot made 39,000 requests per minute to a single website in order to deliver real-time responses.

At a time when websites are already struggling to keep the lights on, AI bots are not only scraping their content without providing fair value for what they take but also pushing web hosting costs up.

To name just one example, the software documentation site Read the Docs reported spending over $5,000 in bandwidth charges after one crawler downloaded nearly 10 TB of zipped HTML files in a single day.

This is unsustainable, and for what? To generate mostly incorrect answers to questions we already had accurate answers to?

While AI crawlers are threatening the stability of the web, the output of AI tools is polluting it.

Three years after the release of ChatGPT, we are all drowning in AI slop in the form of low-quality, low-effort AI-generated images, text, videos, and even entire websites.

In fact, as of May 2025, NewsGuard had identified 1,271 AI-generated news and information sites spanning 16 languages that operate with little to no human oversight.

Now Facebook is full of AI slop, X is full of AI slop, Reddit is full of AI slop, Pinterest is full of AI slop, LinkedIn is full of AI slop, TikTok is full of AI slop, Kindle is full of AI slop, and Google… well, Google is actively generating AI slop.

And it gets worse.

This slop has already started to spread into real life, as thousands of Dubliners discovered on October 31st, 2024, when they flocked to the city centre for a Halloween parade that didn’t exist, promoted on an AI-generated website. It’s gotten to the point where public libraries are being overrun with crappy AI-generated books.

In this context, creating insightful, accurate, human content that adds value to the web is more important than ever.

But what about the competitive advantages of using AI?

We must be mad not to want to squeeze out some of those sweet productivity and efficiency gains…

The biggest, most revered management consulting and accounting firms in the world have spent the last three years publishing report upon report announcing increasingly incredible business benefits from AI and automation:

- A June 2023 report from McKinsey stated that “AI has the potential to automate work activities that absorb 60 to 70% of employees’ time.”

- A May 2024 report from PwC found that “sectors more exposed to AI experience 4.8 times greater labour productivity growth relative to those that are less exposed.”

- A December 2024 survey from Deloitte showed that “almost all organisations report measurable ROI with GenAI.”

- A January 2025 report from McKinsey sized the “long-term AI opportunity at $4.4 trillion in added productivity growth potential” from corporate use cases.

On top of that, we have seen every tech giant roll out AI features into every single tool and platform we use in the workplace, in some cases making it impossible for users to disable them.

Between the glowing reports from the likes of McKinsey and the borderline invasive push from the likes of Google, it makes complete sense for business owners and bosses around the world to dedicate ample resources to figuring out how to unlock some of these incredible benefits from AI.

When narrowing the focus to what businesses in our space are doing, it becomes clear that AI output has become commonplace, too:

- A December 2024 survey from the American Marketing Association showed that 28% of marketing professionals are using generative AI for visual storytelling and design.

- A January 2025 survey from Muck Rack found that 59% of PR professionals use generative AI for research.

- A 2025 PRWeek UK survey found that 45% of the agencies surveyed use AI for writing copy.

However, after reading countless studies, reports and opinion pieces, we believe any short-term gains for our business will lead to long-term pain for the humans in the machine and diminishing results for our clients.

Plus, when it comes to what we do at NeoMam, the productivity gains are simply not there.

Today is not the day that AI chatbots and agents are better at doing our jobs than we are. Generative AI is lukewarm alphabet soup in comparison: a flattened aggregate of the world’s content and the average result of trillions of data sets.

So trying to prompt our way into getting a workable version of what clients pay us to do is simultaneously the most inefficient and the least inspiring option.

Does this make us the New Luddites?

When going against the grain means your 100% digital business can be branded as a bunch of technophobes.

It has taken us some time to just come out and say we prefer to invest in human intelligence over AI because we were afraid this would turn us into pariahs.

We worried potential customers would walk away from NeoMam because we refuse to give in to the AI hype at a time when virtually every other agency in our industry is heavily advertising AI services, tools and agents.

The reality is that whether or not we agree with generative AI, one of our responsibilities to our clients is to ensure that our campaigns can be found and, in 2025, this includes being discoverable through AI answer engines like ChatGPT or Google AI Mode. Ironically, these AI chatbots seem to love our in-depth human content.

And we are not throwing the baby out with the bath water either.

We have been using neural networks, machine learning and tools like AI facial analysis as part of our creative campaign work since before ChatGPT was launched. So we know from experience that there are many useful, helpful and interesting applications of these technologies.

However, we disagree with the general sense of urgency to make generative AI work for our business as a tool for automating our team’s jobs to the point of replacing them altogether.

We are a group of people who love what we do for a living and have honed our skills over more than a decade. If we are being honest, we are not in a hurry to offload our talent to feed somebody else’s machine.